Linear regression is perhaps one of the most well-known and well-understood algorithms in statistics and machine learning.

Isn’t it a technique from statistics?

Predictive modeling is primarily concerned with minimizing the error of a model, or making the most accurate predictions possible, at the expense of explainability. We will borrow, reuse and steal algorithms from many different fields, including statistics, and use them towards these ends.

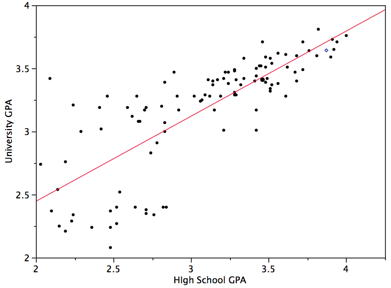

The representation of linear regression is an equation that describes the line that best fits the relationship between the input variables (x) and the output variable (y), by finding specific weightings for the input variables called coefficients (B).

For example:

y = B0 + B1 * x

We will predict y given the input x, and the goal of the linear regression learning algorithm is to find the values for the coefficients B0 and B1.
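To make the prediction step concrete, here is a minimal sketch for the single-input case, assuming the line has the form y = B0 + B1 * x. The function name `predict` and the coefficient values are hypothetical, chosen only for illustration.

```python
def predict(x, b0, b1):
    """Return the predicted y for input x on the line y = b0 + b1 * x."""
    return b0 + b1 * x


# Hypothetical coefficients, picked by hand rather than learned from data.
b0, b1 = 1.0, 2.0

# Predict y for a few example inputs.
predictions = [predict(x, b0, b1) for x in [0.0, 1.0, 2.5]]
print(predictions)
```

In practice the coefficients would not be chosen by hand; finding them is the job of the learning algorithm.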

Different techniques can be used to learn the linear regression model from data, such as a linear algebra solution for ordinary least squares and gradient descent optimization.
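The two approaches mentioned above can be sketched for the single-input case as follows. This is an illustrative sketch, not a reference implementation: the function names, the toy data, the learning rate, and the number of epochs are all assumptions. The ordinary least squares version uses the closed-form formulas for one input variable instead of a general matrix solution.

```python
def fit_ols(xs, ys):
    """Closed-form ordinary least squares for y = b0 + b1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1


def fit_gradient_descent(xs, ys, learning_rate=0.01, epochs=5000):
    """Minimize mean squared error with batch gradient descent."""
    n = len(xs)
    b0, b1 = 0.0, 0.0
    for _ in range(epochs):
        # Prediction error for every training point.
        errors = [(b0 + b1 * x) - y for x, y in zip(xs, ys)]
        # Gradients of mean squared error with respect to b0 and b1.
        grad_b0 = 2.0 / n * sum(errors)
        grad_b1 = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        b0 -= learning_rate * grad_b0
        b1 -= learning_rate * grad_b1
    return b0, b1


# Toy data (made up for illustration), roughly following y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

b0_ols, b1_ols = fit_ols(xs, ys)
b0_gd, b1_gd = fit_gradient_descent(xs, ys)
print(b0_ols, b1_ols)
print(b0_gd, b1_gd)
```

On this toy data both methods should arrive at roughly B0 = 1.09 and B1 = 1.97. The closed-form solution gets there in one pass, while gradient descent approaches it iteratively and depends on a suitable learning rate.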

Linear regression has been around for more than 200 years and has been extensively studied. Some good rules of thumb when using this technique are to remove variables that are very similar (correlated) and to remove noise from your data, if possible.
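One possible way to apply the first rule of thumb is to measure the Pearson correlation between input variables and keep only one from each highly correlated pair. The sketch below assumes a threshold of 0.95 and uses made-up column names and data; both the helper names and the threshold are illustrative choices, not a standard.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length lists (assumes neither is constant)."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def drop_correlated(columns, threshold=0.95):
    """Keep a column only if it is not highly correlated with one already kept."""
    kept = {}
    for name, values in columns.items():
        if all(abs(pearson(values, other)) < threshold for other in kept.values()):
            kept[name] = values
    return kept


# Hypothetical inputs: the same height in two units, plus an unrelated age column.
columns = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [34.0, 22.0, 45.0, 29.0, 51.0],
}

selected = drop_correlated(columns)
print(list(selected))
```

Here `height_in` is dropped because it carries almost the same information as `height_cm`, while `age` is kept.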

It is a fast and simple technique and a good first algorithm to try.

–EOF (The Ultimate Computing & Technology Blog) —
